In a region already gripped by war, despair, and siege, a chilling new dimension is emerging, one that is largely invisible, faceless, and perhaps even more terrifying: artificial intelligence.

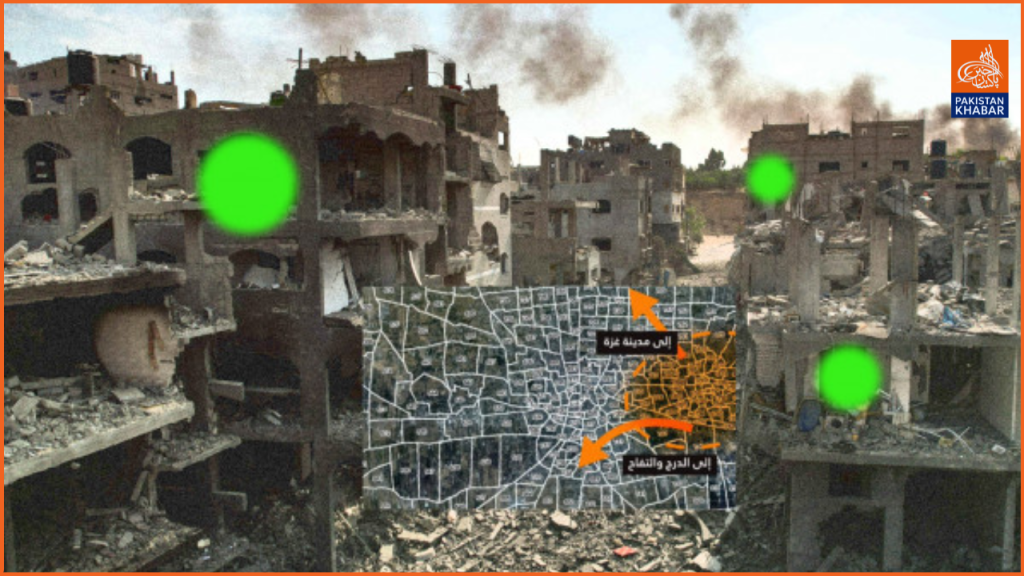

As the smoke rises over Gaza once again, a growing number of reports suggest that Israel’s military strategy is being quietly reshaped by machine-driven decision-making. According to several investigative sources and leaked intelligence, AI systems are no longer only supporting military operations; they may be playing a direct role in identifying who lives and who dies.

At the heart of this issue lies a question that echoes across legal, ethical, and humanitarian corridors around the globe: Should a machine be entrusted with the power to make life-and-death decisions in war?

The Machine in the War Room

Recent accounts, many of which have been corroborated by military analysts and independent journalists, name two specific AI systems allegedly in use: Lavender and Gospel. These platforms are believed to scan vast streams of metadata, including intercepted communications, geolocation data, and digital footprints from phones, apps, and social networks. With this raw data, they reportedly generate real-time lists of targets flagged as potential threats: individuals in the case of Lavender, and buildings and structures in the case of Gospel.

But how these individuals are selected remains largely secret. There’s little information on the algorithms, the thresholds for threat assessment, or the safeguards in place—if any exist at all.

In a densely populated enclave like Gaza, where civilians and combatants often live side by side, the margin for error is razor thin. And in this case, the margin might not even belong to a human.

When War Becomes Calculation

Supporters of such technology, mostly within defense circles, argue that these systems reduce risk to soldiers and increase operational speed and precision. AI, they claim, can process in seconds what would take human analysts hours or days, saving lives on the military’s side.

But opponents—human rights experts, legal scholars, and peace advocates—warn of a dangerous dehumanization creeping into the battlefield. What was once a commander’s decision, made with at least some level of moral deliberation, may now be reduced to a data-driven outcome. War, in this context, risks becoming a spreadsheet of strike probabilities—detached from the reality of civilian suffering.

A senior UN official, speaking off the record, noted: “The concern isn’t just about collateral damage. It’s about erasing the very notion of human accountability. Once the process is automated, it becomes harder to ask: who gave the order?”

The Fog of War—and Code

International humanitarian law demands that all military actions follow strict principles: distinction (between civilians and combatants), proportionality, and necessity. It is not enough for a target to be “possible” or “likely.” There must be clear and justifiable grounds for any strike.

The growing fear is that AI does not—and perhaps cannot—grasp these nuances. Machines don’t perceive grief, trauma, or the messy complexity of life under occupation. They operate on logic trees and pattern recognition. In Gaza, where entire families often live under one roof, where displaced people move frequently, and where communications are limited, those patterns can easily mislead.

If AI misidentifies a teenager livestreaming from a rooftop as a militant, or flags a mobile signal from a crowded market as a “hostile meeting,” who bears the blame?

Gaza: Life Under the Algorithm

From inside Gaza, the fear is not hypothetical. It is immediate and all too real.

“We are always being watched. Every ping, every message, every movement: we don’t know what could get us killed,” said a university student in Khan Younis, speaking on condition of anonymity.

In a place already suffocated by siege and airstrikes, the added weight of algorithmic surveillance has deepened the psychological toll. Many Gazans now feel like they are trapped in an invisible web, one where even their digital shadows could become reasons for execution.

AI in this context does not feel like innovation; it feels like omnipresent judgment, one with no trial, no defense, and no human face behind the decision.

Global Outcry and Moral Reckoning

The international reaction is slowly building. Amnesty International has issued statements urging governments to impose immediate bans or strict moratoriums on the use of autonomous weapons. Human Rights Watch has demanded a full accounting of the AI platforms being used in Gaza. Even within Israel, a handful of whistleblowers and civil society voices have raised alarms about the ethical consequences of letting machines take on what was once a moral burden carried by humans.

A joint declaration by a coalition of AI researchers from top global universities stated: “The delegation of lethal decision-making to algorithms represents an erosion of ethical warfare. It sets a dangerous precedent that could normalize algorithmic killing across future conflicts.”

Yet, so far, no binding and enforceable international rules on this issue have been adopted. Technology, once again, is outpacing regulation, and justice.

This Is Not Just a Gaza Story

This isn’t just a headline about a warzone. This is a story about the future of warfare, and perhaps the future of humanity itself.

What’s being tested in Gaza is not just a new kind of drone or a digital war-room assistant. It is a shift in the moral architecture of conflict: from human conscience to machine calculation.

And with every life lost to an algorithm, a fundamental question becomes harder to ignore:

Have we begun to outsource our humanity to machines?

The debate is no longer about whether AI can kill. That moment has already arrived. The question now is whether we, as a global community, are willing to let it decide who should die.